What I think it will certainly end up doing is producing a 'floor.' Give the bot a few million cycles, and it'll put out a generic piece of media for you.

If human creators can't make something better than that, they won't be able to make a profit anymore.

Past that, who knows?

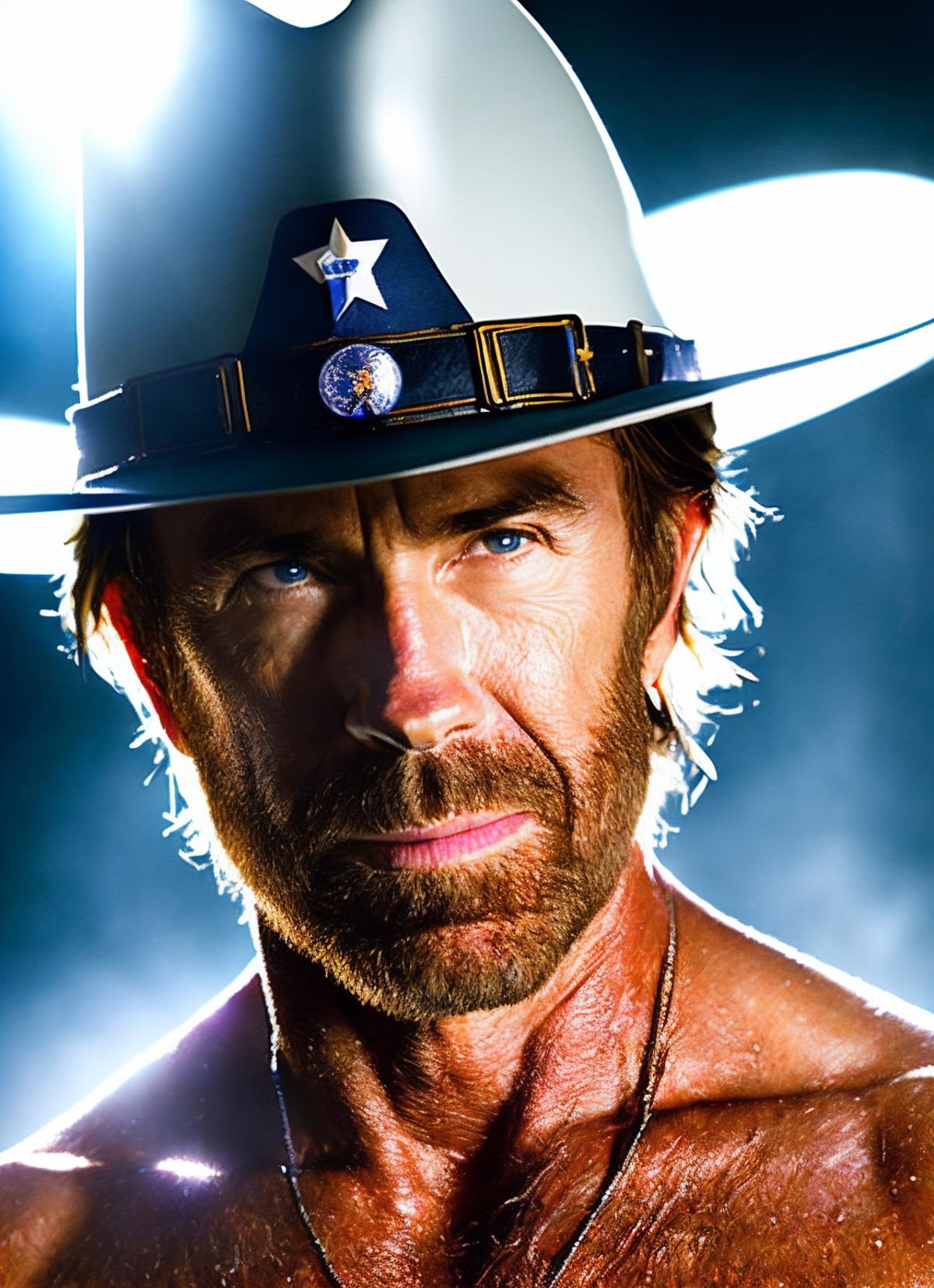

I'd tend to agree with this; it's how other automation systems have gone in the past. Except for the "generic" part, as we're already well past that point. You don't want generic, you want Chuck Norris, or maybe Daisy Ridley's your waifu for some reason?

LyCORIS of Chuck Norris

civitai.com

Another old project from my archive. I'm aware there's already another Daisy Ridley Embedding by Balbrig, but I figured the results are different ...

civitai.com

We got AI trained to put Chuck Norris' face on whatever body you want, Daisy Ridley in whatever position you please. That's how they did the Donald Trump arrest fakes. But what if it's not a character who's your waifu, it's a style? You say you love the 80s-90s early anime aesthetic?

Esthetic 90s style (and a little 80s) New version with increased amount of data 1.3RW, 1.4RW, 1.5RW on Patreon I made a video on how to achieve the...

civitai.com

AI's been trained to produce that exact style. Or maybe you're too young for early anime and want Clone Wars?

Originally posted to HuggingFace by questcoast Style Use token clone wars style for the overall style. Characters The model was trained to recogniz...

civitai.com

Yeah, the whole idea that it produces generic art died a month or two ago. Further, you can easily plug these processes together to create results that are entirely new but entirely consistent: Daisy Ridley in the Clone Wars style, or your own comic featuring Chuck Norris in a 90s anime style.

As for past that, the floor is going to keep rising. A few naysayers keep acting like what it can do this week is the limit and AI won't grow from here. Sometimes they insist there are some magical tech limits, often claiming AI will never be able to do things it's doing right now*. This just isn't true: in the space of two months we've gone from "Doesn't have enough continuity for static comic books" to "The animation is incredibly janky" to "I can still see some artifacts but it's getting smoother."

As the floor keeps rising, jobs for humans get ever more scarce and wages keep falling.

*Mocking them will never get old for me.